Understanding PDF Scraper Technology

PDF Document Structure Analysis with PDFScrape

Technical Overview

At its core, PDFScrape's technology stack combines computer vision algorithms, neural networks, and specialized NLP models to decode the complex structure of PDF documents. Our proprietary parsing engine identifies document hierarchies, spatial relationships between elements, and semantic content, even in documents with complex layouts or poor scan quality. The system's modular architecture enables seamless processing of documents at enterprise scale with a 95% accuracy rate.

The Portable Document Format (PDF) emerged in the early 1990s as a revolutionary technology for consistent document presentation across different operating systems and devices. While brilliantly successful for its intended purpose, the format's emphasis on visual presentation rather than data structure created a technological paradox: perfect for human readability, yet exceptionally challenging for machine comprehension.

From a technical perspective, PDFs utilize a complex object-oriented file architecture where text is stored as character positioning instructions rather than logical content blocks. Characters are defined by precise coordinates rather than semantic relationships, creating a fundamental disconnect between what humans perceive (tables, paragraphs, headers) and what machines see (disconnected text elements with spatial coordinates).
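To make "character positioning instructions" concrete, the sketch below reads a tiny uncompressed content stream (the part of a PDF page that draws text) and reports where each string is placed. It understands only the `BT`, `Tf`, `Td`, `Tj`, and `ET` operators from the PDF specification and ignores text matrices (`Tm`), glyph widths, and string escapes, so it illustrates the format itself, not how PDFScrape parses documents.

```python
import re

# One token per match: a (string) literal, a /Name, a number, or an operator.
# Escaped or nested parentheses inside strings are not handled in this sketch.
TOKEN = re.compile(
    r"\((?P<str>[^)]*)\)|(?P<name>/\w+)|(?P<num>-?\d+(?:\.\d+)?)|(?P<op>[A-Za-z*']+)"
)

def text_runs(stream):
    """Return (x, y, font, size, text) for each Tj in a simple content stream."""
    runs, operands = [], []
    x = y = 0.0
    font = size = None
    for m in TOKEN.finditer(stream):
        kind = m.lastgroup
        if kind == "num":
            operands.append(float(m.group("num")))
            continue
        if kind != "op":
            operands.append(m.group(kind))  # string or name operand
            continue
        op = m.group("op")
        if op == "BT":
            x = y = 0.0                  # a text object starts at the origin
        elif op == "Tf":
            font, size = operands        # /FontName size Tf
        elif op == "Td":
            x += operands[0]             # offset from the start of the current line
            y += operands[1]
        elif op == "Tj":
            runs.append((x, y, font, size, operands[0]))
        operands = []                    # an operator consumes its operands
    return runs

stream = "BT /F1 12 Tf 72 712 Td (Quarterly Report) Tj 0 -14 Td (Revenue) Tj ET"
for run in text_runs(stream):
    print(run)
```

Nothing in the stream marks "Quarterly Report" as a heading or "Revenue" as a table label: a parser sees only strings, a font, and coordinates (with PDF's y-axis pointing up, so the second line has the smaller y).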

PDFScrape's technological innovation lies in bridging this perception gap through cutting-edge computational techniques. Our system approaches PDF documents the way humans do—identifying logical structures, understanding relationships between elements, and recognizing patterns—while overcoming the format's inherent limitations through advanced algorithmic processing. This represents a fundamental shift from traditional extraction methods that rely on brittle pattern matching to a robust computational understanding of document architecture.

The PDF Data Extraction Challenge

To understand why traditional PDF data extraction is difficult, we need to explore how PDFs are structured:

PDF Structure Challenges:

- Presentation-focused design - PDFs prioritize visual appearance over data structure

- No semantic markup - Unlike HTML or XML, PDFs don't inherently mark data types or relationships

- Positional text - Text appears as individual characters with coordinates rather than logical groups

- Mixed content types - Text, images, vectors, and forms intermingle without clear boundaries

- Variable formats - Each PDF creator tool can implement the format differently

- Multi-column layouts - Text flow that makes logical sense to humans but confuses standard parsers

These challenges mean that traditional extraction methods often produce unusable results—jumbled text, misaligned columns, merged cells, and lost data relationships that require extensive manual cleanup.
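The multi-column problem is easy to reproduce. The following sketch assumes text fragments have already been pulled out with (x, y) positions (y measured downward for readability, unlike PDF's upward y-axis) and compares a naive top-to-bottom sort with a simple column-aware ordering. The clustering rule and the `min_gap` value are illustrative assumptions, not PDFScrape's layout analysis.

```python
def naive_order(frags):
    """Sort top-to-bottom, then left-to-right, as a simple parser might."""
    return [text for x, y, text in sorted(frags, key=lambda f: (f[1], f[0]))]

def column_order(frags, min_gap=50.0):
    """Read each column top-to-bottom before moving to the next column.

    Columns are found by clustering the fragments' left x-coordinates:
    a jump larger than min_gap starts a new column.
    """
    if not frags:
        return []
    xs = sorted({x for x, _, _ in frags})
    starts = [xs[0]]
    for prev, cur in zip(xs, xs[1:]):
        if cur - prev > min_gap:
            starts.append(cur)

    def column_of(x):
        # Index of the right-most column start at or left of x.
        return max(i for i, s in enumerate(starts) if s <= x)

    return [text for x, y, text in sorted(frags, key=lambda f: (column_of(f[0]), f[1]))]

# (x, y, text) with y measured downward from the top of the page.
page = [
    (72, 100, "Left line 1"), (320, 100, "Right line 1"),
    (72, 114, "Left line 2"), (320, 114, "Right line 2"),
]
print(naive_order(page))   # interleaves the two columns
print(column_order(page))  # keeps each column together
```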

PDF Parser Architecture: Core Computational Components

Document Vectorization Engine

Converts PDF objects into multi-dimensional vector representations for computational analysis, using a proprietary coordinate transformation system developed by our research team to maintain spatial and semantic relationships.

Key Algorithms:

- Spatial relationship detection neural networks

- Character clustering algorithms (99.7% accuracy)

- PDF stream decompression and tokenization

- Font metric analysis system
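Character clustering can be illustrated with a much simpler rule than a neural model: glyphs on the same line belong to one word while the gap between them stays small relative to the font size. This sketch assumes glyphs arrive as `(char, x0, x1)` tuples for a single line with no explicit space glyphs; the threshold is a hypothetical choice, and the sketch says nothing about how PDFScrape's own clustering reaches the accuracy quoted above.

```python
def cluster_words(chars, font_size, gap_ratio=0.25):
    """Group (char, x0, x1) glyphs from one text line into words.

    A new word starts when the horizontal gap between consecutive glyphs
    exceeds gap_ratio * font_size. The 0.25 default is an assumption.
    """
    words, current, last_x1 = [], "", None
    for ch, x0, x1 in sorted(chars, key=lambda c: c[1]):   # left to right
        if last_x1 is not None and x0 - last_x1 > gap_ratio * font_size:
            words.append(current)
            current = ""
        current += ch
        last_x1 = x1
    if current:
        words.append(current)
    return words

# Glyphs for "Net sales" at 10 pt, listed out of order as they may appear in a stream.
glyphs = [("s", 39, 44), ("N", 0, 5), ("e", 5, 10), ("t", 10, 15),
          ("s", 19, 24), ("a", 24, 29), ("l", 29, 34), ("e", 34, 39)]
print(cluster_words(glyphs, font_size=10))   # ['Net', 'sales']
```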

Multi-modal Content Recognition

Identifies and processes various content types within documents through specialized detection models that operate in parallel processing pipelines, optimized for both CPU and GPU computation.

Processing Modules:

- Table structure detection (ConvNet architecture)

- OCR enhancement with error correction

- Form field identification system

- Image content classification

Semantic Analysis Framework

Processes extracted content through context-aware, industry-specific NLP models that interpret content meaning, relationships, and hierarchies.

Processing Pipeline:

- Domain-specific entity recognition

- Hierarchical relationship classification

- 17 specialized industry models (finance, legal, medical, etc.)

- Adaptive context window processing
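Hierarchical relationship classification can be approximated, for illustration only, with a font-size heuristic: treat the most common size as body text and rank larger sizes as heading levels. This sketch assumes lines arrive as `(text, font_size)` pairs in reading order; it is a rule-based stand-in, not the trained models described above.

```python
from collections import Counter

def build_outline(lines):
    """Nest (text, font_size) lines under headings ranked by font size."""
    # Assume the most frequent size is body text; every larger size is a heading level.
    body_size = Counter(size for _, size in lines).most_common(1)[0][0]
    heading_sizes = sorted({s for _, s in lines if s > body_size}, reverse=True)
    level_of = {s: i + 1 for i, s in enumerate(heading_sizes)}

    root = {"title": None, "level": 0, "children": [], "text": []}
    stack = [root]                      # path from the root to the current heading
    for text, size in lines:
        if size in level_of:
            node = {"title": text, "level": level_of[size], "children": [], "text": []}
            while stack[-1]["level"] >= node["level"]:
                stack.pop()             # close headings at the same or deeper level
            stack[-1]["children"].append(node)
            stack.append(node)
        else:
            stack[-1]["text"].append(text)  # body text belongs to the open heading
    return root

def print_outline(node, indent=0):
    for child in node["children"]:
        print("  " * indent + child["title"])
        print_outline(child, indent + 1)

lines = [("Annual Report", 18), ("Overview", 14), ("Revenue rose.", 10),
         ("Costs fell.", 10), ("Risks", 14), ("Market risk", 12), ("Rates may rise.", 10)]
print_outline(build_outline(lines))
```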

Distributed Computation Grid

Enterprise-scale processing engine manages computational resources across distributed infrastructure, enabling processing of document collections exceeding 100,000 pages with minimal latency.

Technical Specifications:

- Hybrid cloud/on-premise architecture

- Dynamic resource allocation algorithms

- Processing speeds of 2,300+ pages/minute

- Auto-scaling computation clusters
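The throughput figures translate into simple capacity arithmetic. At 2,300 pages per minute, a 100,000-page collection takes roughly 100,000 / 2,300 ≈ 43.5 minutes. A dynamic allocator runs the same calculation in reverse: given a backlog and a deadline, how many workers to start. The sketch below assumes a hypothetical per-worker rate of 115 pages per minute and a fixed worker cap; it is not PDFScrape's allocation algorithm.

```python
import math

def minutes_to_process(pages, pages_per_minute):
    """Wall-clock minutes for a backlog at a given aggregate throughput."""
    return pages / pages_per_minute

def workers_needed(pages, pages_per_worker_per_minute, deadline_minutes, max_workers):
    """Smallest worker count that clears the backlog by the deadline, capped at max_workers."""
    per_worker_capacity = pages_per_worker_per_minute * deadline_minutes
    needed = math.ceil(pages / per_worker_capacity)
    return max(1, min(needed, max_workers))

# Figures from the specifications above: 100,000 pages at 2,300 pages/minute.
print(round(minutes_to_process(100_000, 2_300), 1))   # 43.5

# Hypothetical per-worker throughput of 115 pages/minute and a 30-minute target.
print(workers_needed(100_000, 115, deadline_minutes=30, max_workers=64))   # 29
```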

User Interface: Making Advanced Technology Accessible

While the underlying architecture of PDFScrape is highly sophisticated, we've designed an intuitive interface that makes this powerful technology accessible to users with any level of technical expertise.

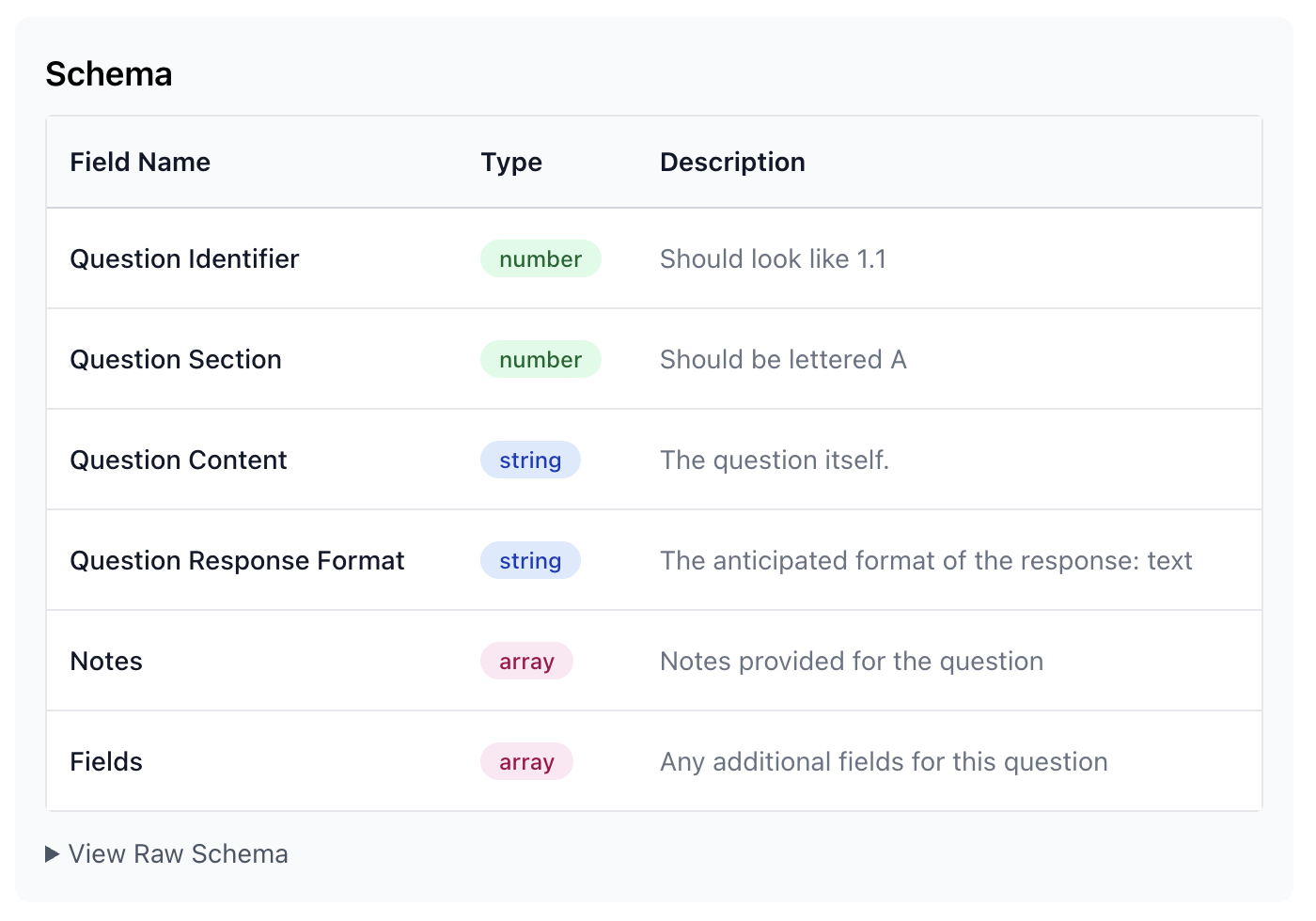

PDFScrape's Schema Definition Interface

Schema Definition System

Our interface translates complex data extraction parameters into a visual schema builder that non-technical users can master in minutes.

Technical Implementation:

- React-based component architecture with 60+ custom elements

- Real-time schema validation and testing

- JSON-based schema definition language with compiler

- Bidirectional synchronization with extraction pipeline
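PDFScrape's schema language is not documented here, so the following is a sketch under an assumed format: a JSON object with a `fields` list whose entries carry a `name` and a `type`. It shows the kind of check a real-time validator could run on every edit, reporting all problems instead of stopping at the first. The allowed type names are hypothetical.

```python
import json

# Hypothetical type names; the real schema language may differ.
ALLOWED_TYPES = {"text", "number", "date", "currency", "boolean"}

def validate_schema(source):
    """Return a list of problems in a JSON schema definition (empty if valid)."""
    try:
        schema = json.loads(source)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno}, column {exc.colno})"]
    fields = schema.get("fields") if isinstance(schema, dict) else None
    if not isinstance(fields, list) or not fields:
        return ["schema must contain a non-empty 'fields' list"]

    errors, seen = [], set()
    for i, field in enumerate(fields):
        if not isinstance(field, dict):
            errors.append(f"field {i}: must be an object")
            continue
        name, ftype = field.get("name"), field.get("type")
        if not isinstance(name, str) or not name:
            errors.append(f"field {i}: missing or invalid 'name'")
        elif name in seen:
            errors.append(f"field {i}: duplicate name '{name}'")
        else:
            seen.add(name)
        if not isinstance(ftype, str) or ftype not in ALLOWED_TYPES:
            errors.append(f"field {i}: unknown type {ftype!r}")
    return errors

draft = '{"fields": [{"name": "total", "type": "currency"}, {"name": "total", "type": "money"}]}'
for problem in validate_schema(draft):
    print(problem)
```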

Visualization Engine

Renders high-fidelity document previews and overlays extraction targets to provide visual confirmation of schema matches.

Core Features:

- Custom-built PDF rendering engine for web browsers

- Interactive selection and targeting tools

- Confidence highlighting with color-coded accuracy indicators

- Cross-document schema testing tools

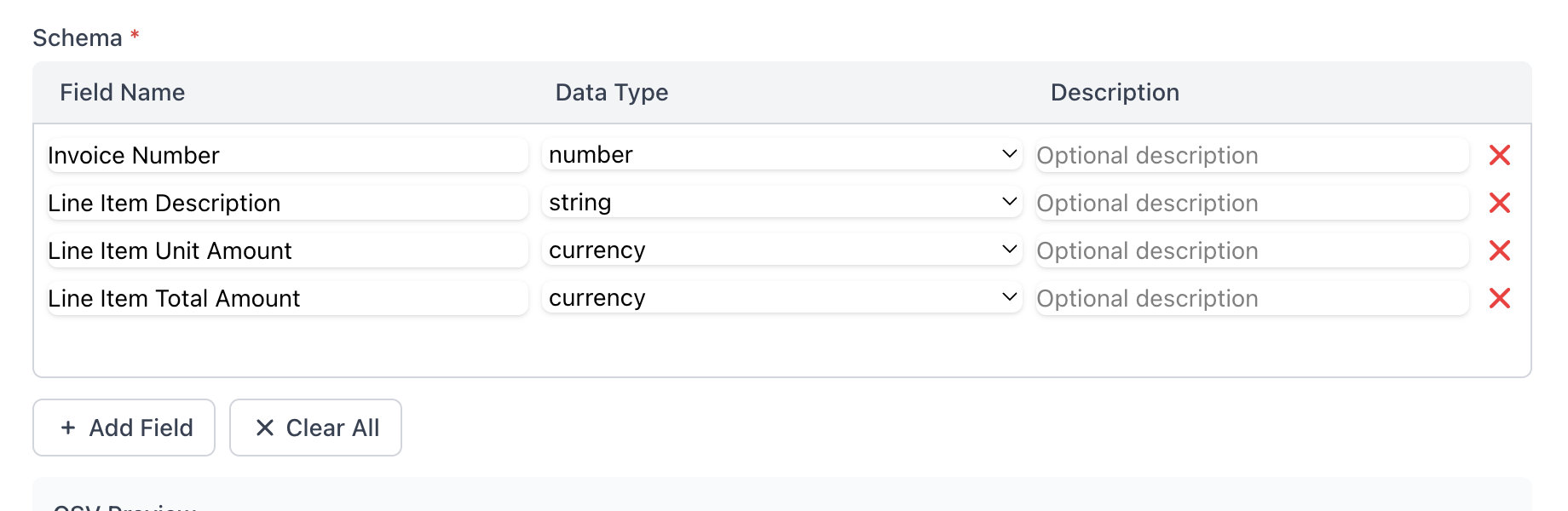

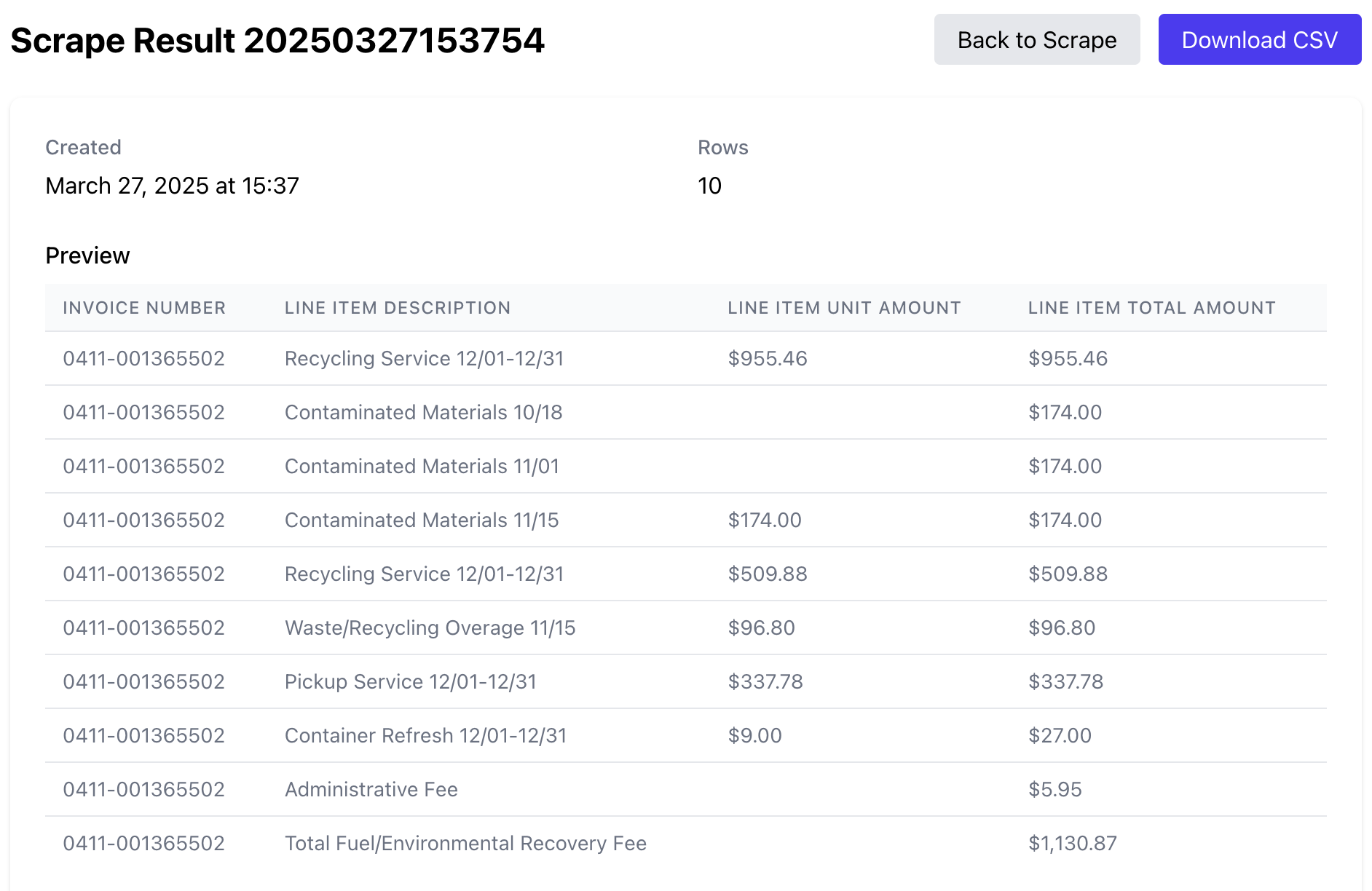

Results Validation and Data Export Interface

Data Transformation and Export Architecture

Once PDFScrape has processed your documents, our data transformation system prepares the extracted information for integration with your existing workflows:

Export Pipeline Components

Data Normalization Engine

Standardizes extracted data according to type-specific rules, with support for 27 standard data types and custom type definitions.
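Type-specific normalization rules can be sketched for two common types, dates and currency amounts. The accepted date formats, the month-first reading of `03/05/2024`, and the accounting convention that parentheses mean a negative amount are all assumptions chosen for illustration; they are not PDFScrape's built-in type definitions.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation

# Assumed input formats; "%m/%d/%Y" reads 03/05/2024 as March 5 (US order).
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %B %Y", "%B %d, %Y")

def normalize_date(raw):
    """Convert a date string in any of DATE_FORMATS to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")

def normalize_amount(raw):
    """Parse '$1,234.50' or '(1,234.50)' (accounting negative) into a Decimal."""
    text = raw.strip()
    negative = text.startswith("(") and text.endswith(")")
    cleaned = text.strip("()").replace("$", "").replace(",", "").strip()
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"unrecognized amount: {raw!r}") from None
    return -value if negative else value

for raw in ["03/05/2024", "5 March 2024", "March 5, 2024"]:
    print(normalize_date(raw))          # 2024-03-05 each time
print(normalize_amount("(1,234.50)"))   # -1234.50
```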

Format Conversion System

Transforms normalized data into various output formats including CSV, Excel, JSON, XML, and database-ready structures with automatic schema generation.
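Format conversion with automatic schema generation can be approximated with Python's standard `csv` and `json` modules plus a small type-inference pass. This sketch assumes extracted rows arrive as dictionaries of strings; the three inferred type names are hypothetical, and database-ready output is out of scope.

```python
import csv
import io
import json

def infer_type(values):
    """Pick the narrowest of integer, number, or text that fits every non-empty value."""
    def fits(cast):
        try:
            for v in values:
                if v != "":
                    cast(v)
            return True
        except ValueError:
            return False
    if fits(int):
        return "integer"
    if fits(float):
        return "number"
    return "text"

def export(rows):
    """Return (csv_text, json_text, schema) for a non-empty list of dict rows."""
    columns = list(rows[0])
    schema = {c: infer_type([str(r.get(c, "")) for r in rows]) for c in columns}
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue(), json.dumps(rows, indent=2), schema

rows = [
    {"item": "Widget", "qty": "3", "price": "4.50"},
    {"item": "Gadget", "qty": "10", "price": "12"},
]
csv_text, json_text, schema = export(rows)
print(schema)   # {'item': 'text', 'qty': 'integer', 'price': 'number'}
print(csv_text)
```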

Integration Management

Handles direct connections to external systems via API, webhooks, or scheduled exports with comprehensive audit logging and versioning.

This sophisticated transformation architecture ensures that extracted data not only maintains its integrity but is also immediately compatible with your existing business systems and analytical tools.

Handling PDFs at Any Scale

PDFScrape's architecture is specifically designed to handle PDF extraction challenges at enterprise scale:

Processing Documents of Any Size

Traditional PDF tools often crash or perform poorly with large documents. PDFScrape overcomes this limitation through:

- Streaming processing - Documents are processed in a streaming fashion rather than loaded entirely into memory

- Intelligent chunking - Breaking down large documents into optimally-sized processing units

- Distributed computation - Spreading processing load across multiple compute resources

- Content-aware optimization - Applying different processing strategies to different document sections

These techniques enable PDFScrape to handle documents ranging from single pages to thousands of pages with consistent performance.
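Streaming and chunking combine naturally in a generator: the document is split into page ranges, and each range is handed to an extraction step whose results are yielded before the next range is read. `extract_range` and `fake_extract` are hypothetical stand-ins for real per-chunk extraction, and the fixed chunk size is a simplification of the "optimally-sized" units described above.

```python
def page_chunks(page_count, chunk_size):
    """Yield (start, end) page ranges, end exclusive, that cover the document."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    for start in range(0, page_count, chunk_size):
        yield start, min(start + chunk_size, page_count)

def process_document(page_count, chunk_size, extract_range):
    """Stream results chunk by chunk; only one chunk's output is built at a time."""
    for start, end in page_chunks(page_count, chunk_size):
        yield from extract_range(start, end)

def fake_extract(start, end):
    # Hypothetical stand-in for real extraction: one record per page.
    return [f"page {i + 1}" for i in range(start, end)]

print(list(page_chunks(2_500, 1_000)))   # [(0, 1000), (1000, 2000), (2000, 2500)]
results = process_document(2_500, 1_000, fake_extract)
print(next(results))                     # page 1, before later chunks are touched
```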

Processing Multiple PDFs Simultaneously

For organizations dealing with large volumes of documents, PDFScrape offers powerful batch processing capabilities:

- Batch uploads - Process hundreds or thousands of documents in a single operation

- Template application - Apply the same extraction schema across multiple similar documents

- Parallel processing - Utilize available compute resources efficiently

- Queue management - Prioritize urgent extractions while handling background processing

- Result aggregation - Combine results from multiple documents into unified datasets

This capability is transformative for organizations that regularly process large document collections, such as financial institutions processing statements, research organizations analyzing publications, or legal firms reviewing case documents.
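Queue management of this kind maps onto a priority queue. The sketch below uses Python's `heapq` with a submission counter as a tie-breaker, so urgent jobs jump ahead while equal-priority jobs keep their submission order. Job IDs and priority numbers are hypothetical, and a production queue would also need persistence and concurrency control.

```python
import heapq
import itertools

class ExtractionQueue:
    """Priority queue for extraction jobs: a lower number means more urgent."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()   # tie-breaker preserves submission order

    def submit(self, job_id, priority=10):
        heapq.heappush(self._heap, (priority, next(self._counter), job_id))

    def next_job(self):
        """Pop the most urgent job, or return None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

queue = ExtractionQueue()
queue.submit("statements-batch-1")
queue.submit("statements-batch-2")
queue.submit("urgent-invoice", priority=1)
while len(queue):
    print(queue.next_job())   # urgent-invoice, statements-batch-1, statements-batch-2
```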

Custom Schema Definitions: The Key to Precision

What is a PDF Extraction Schema?

A schema is essentially a blueprint that tells PDFScrape what data to look for and how to structure it. Schemas can define:

- Field names and types - Specifying column headers and data types (text, numbers, dates, etc.)

- Data relationships - Defining hierarchical relationships between data elements

- Extraction rules - Setting parameters for identifying relevant data

- Validation criteria - Establishing rules for verifying extracted data

- Transformation logic - Defining how raw extracted data should be processed
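Three of those elements (field types, validation criteria, and transformation logic) can be expressed in a few lines. The schema dictionary below is a hypothetical format invented for this illustration, not PDFScrape's schema language; it coerces raw extracted strings to typed values and collects every validation failure rather than stopping at the first.

```python
import re
from datetime import datetime

# Hypothetical schema format: one entry per field with a type plus optional
# validation ("pattern", "min") and transformation ("transform") rules.
SCHEMA = {
    "invoice_number": {"type": "text", "transform": str.upper, "pattern": r"INV-\d{4,}"},
    "invoice_date": {"type": "date", "format": "%d/%m/%Y"},
    "total": {"type": "number", "min": 0},
}

def apply_schema(raw, schema=SCHEMA):
    """Coerce raw extracted strings to typed values; return (record, errors)."""
    record, errors = {}, []
    for name, rule in schema.items():
        value = raw.get(name)
        if value is None:
            errors.append(f"{name}: missing")
            continue
        if "transform" in rule:
            value = rule["transform"](value)
        try:
            if rule["type"] == "number":
                value = float(value.replace(",", ""))
            elif rule["type"] == "date":
                value = datetime.strptime(value, rule["format"]).date()
        except ValueError:
            errors.append(f"{name}: cannot parse {value!r} as {rule['type']}")
            continue
        if "pattern" in rule and not re.fullmatch(rule["pattern"], value):
            errors.append(f"{name}: {value!r} does not match {rule['pattern']}")
        if "min" in rule and value < rule["min"]:
            errors.append(f"{name}: {value} is below the minimum {rule['min']}")
        record[name] = value   # kept even when a rule fails, so reviewers can see it
    return record, errors

record, errors = apply_schema({"invoice_number": "inv-20417",
                               "invoice_date": "03/04/2024",
                               "total": "1,250.00"})
print(record)   # invoice_date is parsed day-first as 3 April 2024
print(errors)   # []
```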

Creating Custom Schemas

PDFScrape offers multiple ways to define extraction schemas:

- Visual Schema Editor - Drag-and-drop interface for defining extraction parameters

- Template Library - Pre-built schemas for common document types

- AI-Assisted Schema Creation - System suggests schema based on document analysis

- Schema API - Programmatically define schemas for integration with other systems

- Schema Sharing - Collaborate on schema definitions within teams

This flexibility allows both technical and non-technical users to create precise extraction definitions tailored to their specific document types and data needs.

Examples of Custom Schema Applications

Financial Statement Extraction

A schema might define fields for revenue, expenses, assets, and liabilities, with rules for identifying these items based on row labels and column headings, even when the exact wording varies across different companies' statements.
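One way to tolerate wording differences is to map each row label to a canonical field through a list of known variants, falling back to fuzzy string matching for near misses. This sketch uses the standard library's `difflib.get_close_matches`; the variant lists and the 0.8 cutoff are hypothetical, and context such as the statement section would also help disambiguate in practice.

```python
import difflib

# Hypothetical canonical fields and the label variants seen for each.
CANONICAL = {
    "revenue": ["revenue", "total revenue", "net sales", "sales", "turnover"],
    "expenses": ["total expenses", "operating expenses", "total costs"],
    "assets": ["total assets"],
    "liabilities": ["total liabilities"],
}
VARIANTS = {label: field for field, labels in CANONICAL.items() for label in labels}

def match_label(label, cutoff=0.8):
    """Map a row label to a canonical field: exact variant first, then fuzzy match."""
    key = " ".join(label.lower().split())      # normalize case and whitespace
    if key in VARIANTS:
        return VARIANTS[key]
    close = difflib.get_close_matches(key, list(VARIANTS), n=1, cutoff=cutoff)
    return VARIANTS[close[0]] if close else None

print(match_label("Net Sales"))          # revenue (known variant)
print(match_label("Total Revenues"))     # revenue (fuzzy match)
print(match_label("Total liabilites"))   # liabilities (typo tolerated)
print(match_label("Dividends paid"))     # None (no confident match)
```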

Invoice Processing

A schema could extract invoice number, date, line items, quantities, unit prices, and totals, with validation rules to ensure the line item totals match the invoice total.
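The invoice rule is a good example of a check that should use decimal rather than binary floating-point arithmetic, since amounts such as 0.10 cannot be represented exactly as floats. This sketch assumes line items have already been extracted as `(description, quantity, unit_price, line_total)` tuples with `Decimal` amounts; the one-cent tolerance is a hypothetical choice.

```python
from decimal import Decimal

def check_invoice(line_items, invoice_total, tolerance=Decimal("0.01")):
    """Return a list of arithmetic problems on an extracted invoice (empty if consistent)."""
    problems = []
    for description, quantity, unit_price, line_total in line_items:
        expected = Decimal(quantity) * unit_price
        if abs(expected - line_total) > tolerance:
            problems.append(f"{description}: {quantity} x {unit_price} = {expected}, not {line_total}")
    summed = sum((line[3] for line in line_items), Decimal("0"))
    if abs(summed - invoice_total) > tolerance:
        problems.append(f"line totals sum to {summed}, invoice says {invoice_total}")
    return problems

items = [("Widget", 3, Decimal("4.50"), Decimal("13.50")),
         ("Gadget", 2, Decimal("12.25"), Decimal("24.50"))]
print(check_invoice(items, Decimal("38.00")))   # []
print(check_invoice(items, Decimal("40.00")))   # ['line totals sum to 38.00, invoice says 40.00']
```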

Research Data Extraction

A schema might target specific statistical measures across multiple research papers, standardizing different notations and units into a consistent format for meta-analysis.

Advanced PDF Scraper Capabilities

Table Extraction Intelligence

PDFScrape excels at handling complex tables, including:

- Borderless tables - Detecting table structures without visible borders

- Merged cells - Correctly interpreting cells that span multiple rows or columns

- Nested tables - Handling tables within tables while maintaining relationships

- Multi-page tables - Properly connecting tables that continue across page breaks

- Column recognition - Accurately detecting column boundaries in complex layouts

These capabilities make PDFScrape particularly valuable for financial analysis, research data collection, and other applications involving complex tabular data.
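For borderless tables, one classic heuristic (much simpler than a convolutional model) is a whitespace projection: merge the horizontal extents of every word in the table region and treat the gaps that no word crosses as column boundaries. This sketch assumes words arrive as `(x0, x1, text)` tuples already grouped by row; merged cells that span columns would defeat it, which is one reason more robust methods are needed.

```python
def column_spans(words, min_gap=5.0):
    """Merge the x-extents of all words into column spans separated by whitespace.

    words: iterable of (x0, x1, text) from every row of a borderless table.
    """
    spans = []
    for x0, x1, _ in sorted(words):
        if spans and x0 - spans[-1][1] < min_gap:
            spans[-1][1] = max(spans[-1][1], x1)   # overlaps or nearly touches: extend
        else:
            spans.append([x0, x1])                 # whitespace gap: new column
    return [tuple(s) for s in spans]

def assign_columns(row, spans):
    """Place each (x0, x1, text) word of one row into the span containing its centre."""
    cells = [""] * len(spans)
    for x0, x1, text in row:
        centre = (x0 + x1) / 2
        for i, (left, right) in enumerate(spans):
            if left <= centre <= right:
                cells[i] = (cells[i] + " " + text).strip()
    return cells

rows = [
    [(10, 60, "Item"), (100, 130, "Qty"), (170, 210, "Price")],
    [(10, 70, "Widget"), (105, 115, "3"), (170, 200, "4.50")],
    [(10, 40, "Gadget"), (105, 120, "10"), (175, 205, "12.00")],
]
spans = column_spans(word for row in rows for word in row)
print(spans)                                  # [(10, 70), (100, 130), (170, 210)]
for row in rows:
    print(assign_columns(row, spans))
```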

Form Data Extraction

For forms and structured documents, PDFScrape offers specialized features:

- Field detection - Identifying form fields and their labels

- Checkbox/radio button recognition - Detecting selected options

- Key-value pair extraction - Matching labels with their associated values

- Signature detection - Identifying the presence of signatures

- Form templatization - Creating reusable templates for recurring form types

These features enable automated processing of application forms, questionnaires, surveys, and other form-based documents.
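Key-value pair extraction and checkbox detection can be sketched with regular expressions once form text has been recovered line by line. This assumes labels and values share a line separated by a colon and that checkboxes were rendered as `[x]` or `[ ]`; real forms often need spatial matching or the PDF's own form-field data instead, so treat this as an illustration only.

```python
import re

KEY_VALUE = re.compile(r"^\s*(?P<key>[^:]+?)\s*:\s*(?P<value>.*?)\s*$")
CHECKBOX = re.compile(r"^\s*\[(?P<mark>[ xX])\]\s*(?P<label>.+?)\s*$")

def extract_form(lines):
    """Return ({label: value}, {option: checked}) from recovered form text lines."""
    fields, checkboxes = {}, {}
    for line in lines:
        if m := CHECKBOX.match(line):
            checkboxes[m["label"]] = m["mark"].lower() == "x"
        elif m := KEY_VALUE.match(line):
            fields[m["key"]] = m["value"] or None   # empty value: field left blank
    return fields, checkboxes

form = ["Name: Jane Doe", "Appointment: 2024-05-01 10:30", "Phone:",
        "[x] Subscribe to newsletter", "[ ] Share data with partners"]
fields, checkboxes = extract_form(form)
print(fields)
print(checkboxes)
```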

Text Analysis and Processing

Beyond basic extraction, PDFScrape can perform sophisticated text analysis:

- Entity recognition - Identifying names, organizations, dates, and other entities

- Content classification - Categorizing text by topic or relevance

- Sentiment analysis - Determining positive, negative, or neutral sentiment

- Language detection - Identifying and properly handling multiple languages

- Content summarization - Creating concise summaries of longer text sections

These capabilities enable not just data extraction but also content analysis, making PDFScrape valuable for compliance monitoring, research, customer feedback analysis, and other text-intensive applications.

Common PDF Scraping Challenges and Solutions

Challenge: Inconsistent PDF Formats

Organizations often receive PDFs from multiple sources, each with different layouts and structures.

Solution:

- PDFScrape's template system can create format-specific extraction rules

- AI-powered format detection automatically selects the appropriate template

- Flexible schema matching tolerates variations in layout and terminology

- System learns from processing history to improve handling of new variations

Challenge: Poor Quality Scans

Many PDFs are created from low-quality scans, faxes, or photocopies with artifacts and noise.

Solution:

- Enhanced OCR with pre-processing for image cleanup

- Intelligent error correction based on expected content patterns

- Confidence scoring to flag potentially inaccurate extractions

- Human-in-the-loop validation for questionable content
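Confidence scoring and human-in-the-loop review fit together as a simple three-way routing rule. The thresholds below (accept at 0.95, reject under 0.5) and the `Extraction` record are hypothetical; the sketch only assumes that each extracted value comes with a confidence score between 0 and 1.

```python
from dataclasses import dataclass

@dataclass
class Extraction:
    field: str
    value: str
    confidence: float   # 0.0 to 1.0, assumed to come from the OCR/extraction step

def route(extractions, accept_at=0.95, reject_below=0.5):
    """Sort extractions into accepted, human-review, and rejected buckets."""
    buckets = {"accepted": [], "review": [], "rejected": []}
    for item in extractions:
        if item.confidence >= accept_at:
            buckets["accepted"].append(item)
        elif item.confidence >= reject_below:
            buckets["review"].append(item)     # a person confirms or corrects it
        else:
            buckets["rejected"].append(item)   # too unreliable; re-scan or re-extract
    return buckets

results = route([
    Extraction("total", "1,250.00", 0.99),
    Extraction("invoice_date", "O3/04/2024", 0.72),   # OCR read a zero as the letter O
    Extraction("po_number", "", 0.20),
])
for bucket, items in results.items():
    print(bucket, [item.field for item in items])
```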

Challenge: Complex Multi-page Documents

Long reports mix varied content types and often contain tables that span page breaks.

Solution:

- Document structure analysis to understand page relationships

- Content flow tracking across page boundaries

- Header/footer detection to avoid duplication

- Table continuation detection and reconstruction
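Header and footer detection can be approximated by looking for lines that recur at the top or bottom of most pages, after masking digits so running page numbers still match. This sketch assumes each page is already a list of text lines in reading order; the 60% recurrence threshold is a hypothetical parameter.

```python
import re
from collections import Counter

def strip_repeated_margins(pages, min_share=0.6):
    """Drop header/footer lines that recur on at least min_share of the pages.

    pages: list of pages, each a list of text lines in reading order. Only each
    page's first and last lines are candidates, and digits are masked so that
    'Page 1 of 3' and 'Page 2 of 3' count as the same line.
    """
    def key(line):
        return re.sub(r"\d+", "#", line.strip())

    counts = Counter()
    for lines in pages:
        if lines:
            counts.update({key(lines[0]), key(lines[-1])})  # set: count once per page
    repeated = {k for k, n in counts.items() if n / len(pages) >= min_share}

    cleaned = []
    for lines in pages:
        body = list(lines)
        if body and key(body[0]) in repeated:
            body.pop(0)
        if body and key(body[-1]) in repeated:
            body.pop()
        cleaned.append(body)
    return cleaned

pages = [
    ["ACME Corp Annual Report", "Revenue grew.", "Page 1 of 3"],
    ["ACME Corp Annual Report", "Costs fell.", "Page 2 of 3"],
    ["ACME Corp Annual Report", "Outlook is stable.", "Page 3 of 3"],
]
print(strip_repeated_margins(pages))
```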

Challenge: Data Validation and Accuracy

Extracted data must be verified as complete, accurate, and properly formatted.

Solution:

- Built-in validation rules (e.g., ensuring columns sum correctly)

- Pattern matching for expected data formats

- Anomaly detection to flag unusual values

- Preview and confirmation steps before finalizing extraction
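For anomaly detection on numeric columns, a robust option is the modified z-score built on the median absolute deviation (MAD), with the commonly used 0.6745 scaling constant and 3.5 cut-off. Unlike a mean and standard deviation, the median and MAD are not pulled toward a single extreme value such as a misplaced decimal point. This is a generic statistical sketch, not a description of PDFScrape's detector.

```python
from statistics import median

def flag_anomalies(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold.

    Uses the median absolute deviation (MAD), which a single extreme value
    cannot drag the way it drags a mean and standard deviation.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Most values are identical; treat any value that differs as anomalous.
        return [i for i, v in enumerate(values) if v != med]
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]

# Monthly totals where one value was extracted with a misplaced decimal point.
monthly = [1020, 980, 1005, 995, 1010, 10050]
print(flag_anomalies(monthly))   # [5]
```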

PDF Scraper Applications Across Industries

Financial Services

Challenge: Financial institutions deal with thousands of statements, prospectuses, and reports that contain critical data needed for analysis, compliance, and decision-making.

Solution: PDFScrape automates extraction of financial metrics, transaction details, and investment data across multiple document types and sources, enabling faster analysis and reporting.

Result: One investment firm reduced financial data processing time by 92%, from approximately 160 hours monthly to just 12 hours, while improving data accuracy and completeness.

Healthcare and Life Sciences

Challenge: Research institutions and healthcare providers need to extract and analyze data from clinical trial reports, medical literature, and patient records in PDF format.

Solution: Custom extraction schemas for clinical data, research findings, and patient information enable comprehensive data collection while maintaining privacy and compliance.

Result: A research team completed a meta-analysis of 500+ clinical trial PDFs in three weeks instead of the six months originally estimated, accelerating its work on important treatment protocols.

Legal and Compliance

Challenge: Legal firms and compliance departments must extract specific clauses, terms, and data points from contracts, legal filings, and regulatory documents.

Solution: PDFScrape's entity recognition and contextual understanding capabilities can identify and extract relevant legal provisions, party information, and key terms across document collections.

Result: A corporate legal department automated contract analysis for 10,000+ supplier agreements, identifying non-compliant terms and saving an estimated 2,000 hours of manual review time.

The Future of PDF Scraping Technology

PDF scraping technology continues to evolve rapidly, with several exciting developments on the horizon:

- Zero-shot learning - Extracting data from completely new document types without prior training

- Multi-modal understanding - Integrating text, image, and chart analysis in a unified extraction framework

- Self-optimizing schemas - Systems that automatically refine extraction parameters based on results

- Federated extraction - Secure data extraction that preserves privacy and confidentiality

- Natural language interfaces - Defining extraction requirements through conversational prompts

PDFScrape is at the forefront of these innovations, continuously enhancing our PDF parser technology to solve increasingly complex document processing challenges.

Ready to extract data from your PDFs?

PDFScrape makes it easy to convert unstructured PDF data into usable formats. Create your free account today and start processing your first document in less than 5 minutes.